Did Claude Code Lose Its Mind, Or Did I Lose Mine?

Claude and I drifted apart, and it turns out it was my fault.

Yes, I have been clauding — as regular readers know. I’ve spent hours and many hundreds of dollars deep in the roguelike latent space exploration loop, finding and collecting Markdown PRDs, work plans, revisions, revisions of revisions, and eventually some production code.

Or, at least, I was doing all this. At some point a few weeks ago, I got out of the obsessive coding phase because other startup duties demanded my attention. Meetings, product stuff, customer support, and the like all took me out of the coding tunnel and back into Google Meets and Slack.

When I finally turned back to working on another feature Claude Code, I noticed something: the output sucked 👎.

Like, not just kind of a little worse, but terrible. Claude just wasn’t listening to me, and it was doing everything wrong. I also saw people complaining about this on my X.com feed, and some of the other engineers at Symbolic were also sharing Claude horror stories in a Slack channel we have dedicated to AI-assisted programming. (I recommend the practice of having such a channel, BTW).

But as I struggled with this, it occurred to me that perhaps it wasn’t really Claude that had drifted — perhaps it was me.

After some investigation, I came to the conclusion that most of the apparent decline in Claude Code’s quality was actually my fault. Here’s what happened.

The (semi-)successful port

One of my earlier Claude Code discoveries involved porting LangChain’s Markdown chunker from Python to Elixir. I want to talk through the steps of how I did this because it’s a good, practical example of how to use AI-assisted programming tools to get good results.

1️⃣ First, I cloned into the LangChain repo and booted up Claude Code in it. I had already identified the name of the specific Markdown chunker module that I wanted to port to Elixir (MarkdownTextSplitter). So I then pointed Claude at it and first asked it to examine the chunker, and then use the code and the associated notebook in the repo to write some failing tests in Elixir for it. Just a few basic failing tests — nothing too elaborate.

2️⃣ Then I focused Claude on getting those Elixir tests passing by asking it to look at the Python for inspiration when coding the Elixir version. Note that I didn’t “vibe code” this in the classic sense, either — I stayed focused during the whole session, evaluating proposed code and changes, and giving guidance when rejecting code. (I wrote the general-purpose, recursive chunker we use internally from first principles maybe a year ago, and it supports Markdown and a few other formats, so I know what I’m doing here.)

I ended up getting the tests to pass and getting the new chunker in pretty good shape. I got it cleaned up and merged, and began experimenting with it on and off.

But here’s why I described the port as “semi-successful”: I never fully put the new chunker into production. It was part of some planned work, but because chunking is so critical to everything we do at Symbolic, I wanted to test it more before replacing the existing, battle-tested chunker with it.

But I didn’t make a Linear ticket for this small bit of work (yeah, I know, it’s my fault), so it got lost in the shuffle and sat there for a few weeks unused apart from some experiments.

The attempted reboot

Some weeks later, I was working on a different feature, and having completely forgotten about the previous few hours’ worth of (mostly Claude) work, I thought to myself: “hey, I need a dedicated Markdown chunker… I know my current implementation isn’t entirely what I want, so I’ll make some failing tests for a new version and Claude it out.”

🗯️ So Claude started refactoring some module in my code called MarkdownChunker, and I was like “wait, what is this module?!” Again, I had forgotten that I had done this already in a previous session. I simply had no memory of it whatsoever, probably because I didn’t write it.

In this second session, I assumed, based on the name, that this module was just a wrapper around my current production chunker, but just set up to only use Markdown separators instead of taking in any old list of separators as an argument. So I didn’t look at it any further, and I just let Claude cook, turning on “auto-accept” so the bot could work on the problem while I did something else.

😡 When it was finished, what Claude had done with this module was frankly astonishing — in a very bad way. The bot had copied large portions of the text from my test file into the production code, and then added branching conditionals and pattern-matching so that the module would now chunk only that specific content from the test file. In other words, the new module code would figure out if it had been given one of the test Markdown segments, and it would just dumbly return the hard-coded chunks.

On seeing this, I sternly directed Claude to never do this, and I went to some lengths to lecture it about producing only generalizable solutions that had no awareness of the specifics of the test file.

Claude told me it understood everything I was saying, and it repeated it all back to me in its own words to confirm. Then I let it run again to clean up its mess. When I next checked in on it, I found it had done the exact same thing as before — the whole “production” module was still fake and was still designed solely to pass the tests.

It was at this point that I finally stopped multitasking and I focused all my attention on this specific problem, to try and understand what was happening. I rewound and looked at the version of the MarkdownChunker module that Claude had started out with in this session, and saw that it was complex and accommodated tons of Markdown corner cases. Then it all started to come back to me — I remembered doing all the steps I described in the previous section, and realized that I had basically played and beaten this level before.

Ok wow. So the first time around, I got this great output that I ultimately didn’t do anything with. And the second time around, I got the worst garbage I’d ever seen from a model since GPT-3 days. What is happening?!

I eventually nuked the branch and declared to the team that Claude had lost its mind. I warned the devs to be wary of using Claude Code for anything important, and I went back to testing Cursor, PearAI, RepoPrompt, and a few other tools with other models like Gemini.

Post-mortem of the reboot

If you’ve been following along with my last few newsletter posts, you may have already spotted the many mistakes I made in the above story. And I don’t just mean basic failures of software engineering — like how I forgot to make a linear ticket to follow up on the work, I left an unused module in production and never fully implemented it, I literally forgot that this entire module was there, and so on.

No, the second time around with the Markdown chunker, I did all the AI stuff wrong.

I had settled into a comfortable groove with the bot, and I had subtly started changing the way I used it. I wasn’t paying nearly as close attention, and I had the sense in my sessions that the bot was drifting and degrading in performance, but in hindsight, it was I who was doing most of the drifting and degrading.

Somewhere in the previous few weeks, I had started to relate to the bot as a junior programmer that I had a sort of mind-meld with, where I could speak to it in shorthand and not spell everything out. And as I began to relate to Claude Code this way, I started to relax my vigilance, take shortcuts, skip steps, and generally abandon the process that had worked so well early on.

I was unintentionally giving the bot bigger and bigger bites to chew on, with less and less active direction and focused oversight. And as I was slowly slipping into this mode with Claude, I was getting more and more frustrated with the output.

When all of this started to come together for me, I went back to Claude Code for a new feature and fully engaged the earlier process that I had described in my previous posts, i.e. start with exploration and Markdown descriptions of the work, break everything up into small bites, do a little at a time, closely monitor all proposed code changes, manage the context window so that it stays “cool”, etc.

Needless to say, the quality of the output jumped all the way back up again. Maybe there really was some model drift in there that degraded the results — I have no way of verifying this. But in hindsight, I’m convinced that 90% of the problem was user error. I had gotten comfortable, lazy, and overconfident in the model’s capabilities, and as I did that, the output quality started to collapse.

Lessons learned

I’ve drawn a few lessons from this experience, and also from the fact that I’m seeing regular “Claude fell off” tweets, along with indicators that founders are having a hard time getting their teams to keep using these tools to maximize productivity after the initial “wow” factor wears off.

I think what I’ve described so far in this post is behind a lot of this phenomenon. Hopefully, the following lessons will help others who find themselves in this same situation.

You & the bot are a coupled system

🤝 When you’re really in the zone with an AI assistant, and you’re actively steering it through latent space in a targeted search for the output you need, you and the LLM become a kind of closed, coupled system.

What this means on a practical level is that if one of you starts to change their behavior, the performance of the system as a whole will change in unpredictable ways.

The only way to avoid this kind of drift is to monitor, measure, and validate outcomes against some fixed benchmarks. Without this kind of practice, you’re guaranteed to drift.

But I don’t have any practical suggestions for what such a practice would look like, so in the absence of that, the next best thing is to have a fixed process that you stick to.

Stick to the process

☑️ There’s a reason that checklists are an old and effective tool in situations where you absolutely have to do all the things in the right order. Humans will drift, their attention will wane, they’ll take shortcuts, and they’ll often not even know they’re changing the process.

If you’re not working from a list — whether it’s written out or it’s captured in an acronym or other mnemonic — then you, too, will accidentally and gradually abandon a formula that works.

Have a process for helping others stick to the process

💬 My own plan is to start checking in with our engineers in our 1-1 sessions and ask them how their AI-assisted coding is going. Are they still using the tools, or are they using any new ones that they’re excited about? Have they noticed any changes in output quality, and if so, what steps did they take to investigate and/or mitigate?

I suspect I’m going to have to be as deliberate with teammates as I am with Claude Code itself, in terms of consistently working through all the steps in a defined process that has at least a vaguely measurable outcome.

We need better tools

🔨 Nothing in the chat interface that dominates AI right now encourages you to do any of the practices that I’ve described in my newsletter and that will make your LLM sessions a success.

Indeed, the chat interface explicitly encourages users to attempt to one-shot an entire project with the least possible amount of typing.

Think about how much of a pain it is to type anything long into nearly every chatbot UI, from Claude Code to OpenAI’s chat. If you hit “enter,” it immediately sends your message, instead of going to a newline. It’s like the UI is really trying to encourage you to type as little as possible, and to avoid breaking up the prompt into sections and paragraphs.

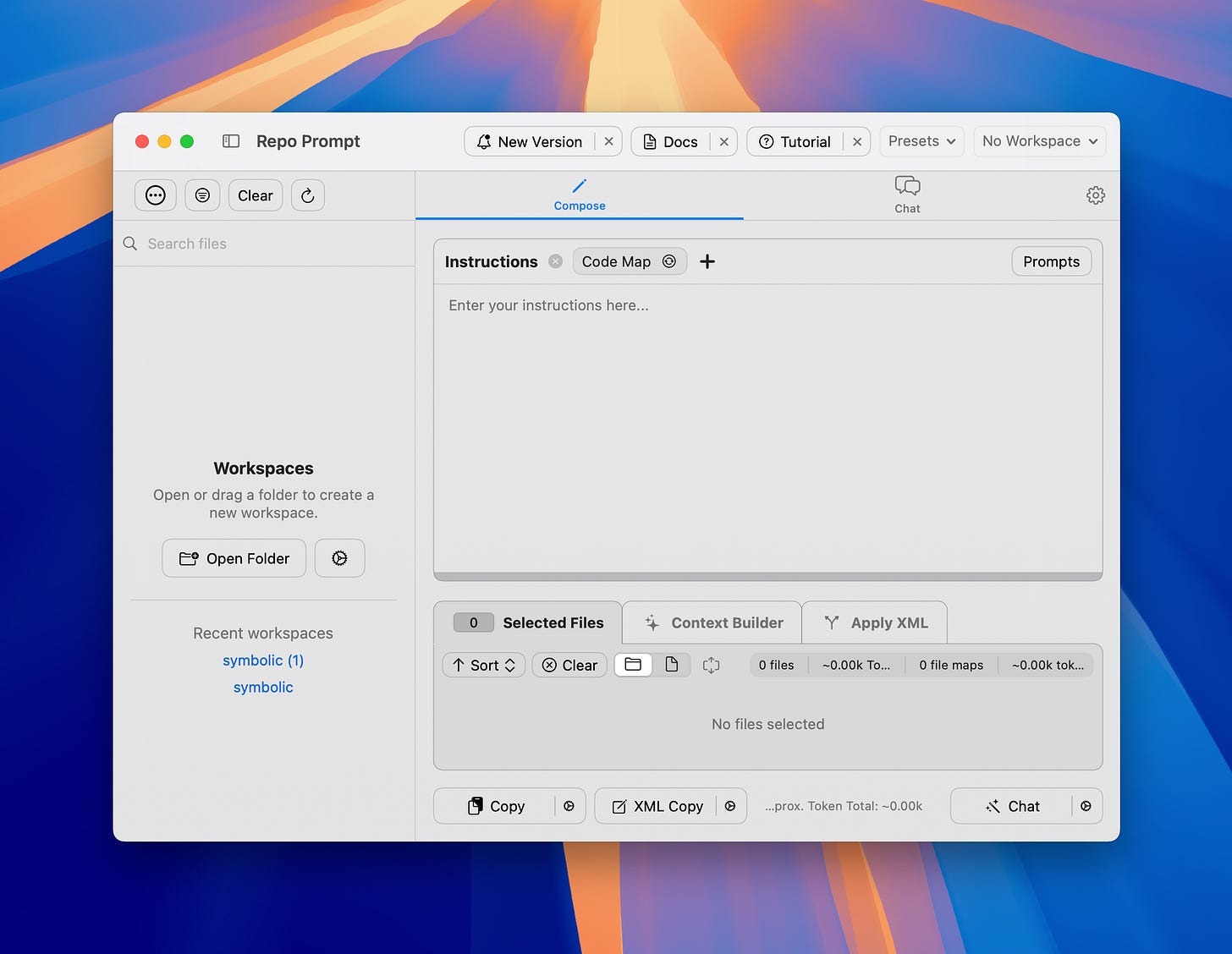

There are new tools that encourage you to compose longer prompt documents and to carefully assemble context for the bot, and I do like these and use them occasionally. But my default is still to type something out into a standard Markdown document and then either paste it into a chat UI or (in the case of Claude Code) point the bot at the file and instruct it to read it.

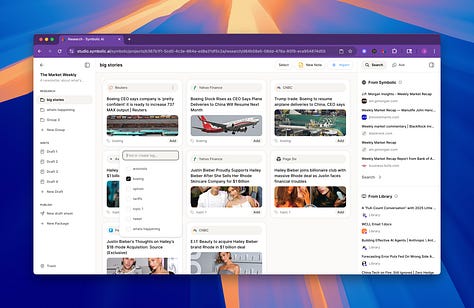

AI tools also rarely encourage you to break up work into sections. This is something we’ve taken seriously at Symbolic with our new section-based editor.

You can see in the first screenshot below how the research grid lets you tag specific assets.

Then, in the editor where you create drafts, you can filter the RAG inputs by the tags you assigned in the research grid. You can also assign specific format instructions on a per-section basis.

This combination of narrowing the RAG inputs via tagging and sub-draft-level instructions keeps the context window focused. The idea is to help you with dividing up a large text artifact, like a multi-section newsletter, into smaller bites that are easy for the model to nail on the first try.

In general, one of the primary things we’re trying to do at Symbolic is to structure the tools so that they encourage you to do the right thing. We want to nudge you into breaking up the work in a way that will tend to lead to more consistently better results.

It’s early days with this kind of work, but it’s going to be needed for the foreseeable future, at least as long as we’re working within the limitations of the current autoregressive LLM paradigm. Commentators and investors who aren’t trying to do real work with these models daily often claim large context windows or some other advancement will eliminate the need to focus the models on bite-sized chunks of work, but these people are all wrong.

I think the returns to good UX are massively increasing relative to the returns to effort and quality in most other layers of the software stack. But that’s a topic for another day.